mirror of

https://github.com/open-webui/docs.git

synced 2026-01-04 10:46:26 +07:00

chore: format


@@ -4,18 +4,19 @@ title: "🛌 Integrate with Amazon Bedrock"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

|

||||

|

||||

:::

|

||||

|

||||

---

|

||||

|

||||

# Integrating Open-WebUI with Amazon Bedrock

|

||||

## Integrating Open-WebUI with Amazon Bedrock

|

||||

|

||||

In this tutorial, we'll explore one of the most common approaches to integrating Open-WebUI with Amazon Bedrock.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

|

||||

In order to follow this tutorial, you must have the following:

|

||||

|

||||

- An active AWS account

|

||||

@@ -23,7 +24,6 @@ In order to follow this tutorial, you must have the following:

|

||||

- IAM permissions in AWS to enable Bedrock models or already enabled models

|

||||

- Docker installed on your system

|

||||

|

||||

|

||||

## What is Amazon Bedrock

|

||||

|

||||

Direct from AWS' website:

|

||||

@@ -32,24 +32,22 @@ Direct from AWS' website:

|

||||

|

||||

To learn more about Bedrock, visit: [Amazon Bedrock's Official Page](https://aws.amazon.com/bedrock/)

|

||||

|

||||

# Integration Steps

|

||||

## Integration Steps

|

||||

|

||||

## Step 1: Verify access to Amazon Bedrock Base Models

|

||||

|

||||

Before we can integrate with Bedrock, you first have to verify that you have access to at least one, but preferably many, of the available Base Models. At the time of this writing (Feb 2025), there were 47 base models available. You can see in the screenshot below that I have access to multiple models. You'll know if you have access to a model if it says "✅ Access Granted" next to the model. If you don't have access to any models, you will get an error on the next step.

|

||||

|

||||

AWS provides good documentation for requesting access to and enabling these models here: [Amazon Bedrock's Model Access Docs](https://docs.aws.amazon.com/bedrock/latest/userguide/model-access-modify.html)

|

||||

AWS provides good documentation for requesting access to and enabling these models in the [Amazon Bedrock Model Access Docs](https://docs.aws.amazon.com/bedrock/latest/userguide/model-access-modify.html).


## Step 2: Configure the Bedrock Access Gateway

|

||||

|

||||

Now that we have access to at least one Bedrock base model, we need to configure the Bedrock Access Gateway, or BAG. You can think of the BAG as a kind of proxy or middleware developed by AWS that wraps around the AWS-native endpoints/SDK for Bedrock and, in turn, exposes endpoints that are compatible with OpenAI's schema, which is what Open-WebUI requires.

|

||||

|

||||

For reference, here is a simple mapping between the endpoints:

|

||||

|

||||

|

||||

| OpenAI Endpoint | Bedrock Method |

|

||||

|-----------------------|------------------------|

|

||||

| `/models` | list_inference_profiles |

|

||||

@@ -57,9 +55,10 @@ For reference, here is a simple mapping between the endpoints:

|

||||

| `/chat/completions` | converse or converse_stream |

|

||||

| `/embeddings` | invoke_model |
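
Because the gateway exposes OpenAI-style endpoints, it can be exercised with any OpenAI-compatible client before Open WebUI is connected. The following is a minimal sketch using only Python's standard library. The base URL, API key, and model ID are placeholder assumptions, not values from this tutorial, and the helper names `build_chat_request` and `send` are hypothetical.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at the gateway."""
    payload = {
        "model": model,  # assumption: a model ID your gateway lists under /models
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON body (requires a running gateway)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)
```

Calling `send(build_chat_request(...))` with your own gateway address and key should return an OpenAI-style response object if the gateway and model access are configured correctly.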

|

||||

|

||||

The BAG repo can be found here: [Bedrock Access Gateway Repo](https://github.com/aws-samples/bedrock-access-gateway)

|

||||

The BAG repo can be found in the [Bedrock Access Gateway Repo](https://github.com/aws-samples/bedrock-access-gateway)

|

||||

|

||||

To set up the BAG, follow the steps below:

|

||||

|

||||

- Clone the BAG repo

|

||||

- Remove the default `dockerfile`

|

||||

- Change the name of the `Dockerfile_ecs` to `Dockerfile`

|

||||

|

||||

@@ -3,7 +3,9 @@ title: "Azure AD Domain Services (LDAPS) Integration"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

|

||||

|

||||

:::

|

||||

|

||||

This guide explains how to integrate Open WebUI with Azure AD Domain Services (AAD DS) for secure LDAP (LDAPS) authentication.

|

||||

@@ -12,32 +14,34 @@ This guide explains how to integrate Open WebUI with Azure AD Domain Services (A

|

||||

|

||||

| Item | Description |

|

||||

| ------------------- | ------------------------------------------------------------------------ |

|

||||

| **Azure Account** | An account with permissions to deploy AAD DS and modify NSGs. |

|

||||

| **OpenSSL 3.x** | Required to generate a self-signed PFX for testing. |


|

||||

| **Domain Name** | We'll use `openwebui.onmicrosoft.com` as an example (your AAD primary domain). |

|

||||

| **Open WebUI Server** | A running instance of Open WebUI, deployed via Docker or bare-metal. |


|

||||

|

||||

:::caution

|

||||

|

||||

In a production environment, use a PFX certificate issued by a public Certificate Authority (CA) and set `LDAP_VALIDATE_CERT=true`.

|

||||

|

||||

:::

|

||||

|

||||

## 2. Deploy Azure AD Domain Services

|

||||

|

||||

1. In the Azure Portal, search for and select **Azure AD Domain Services**.

|

||||

2. Click **Create**.

|

||||

3. Select your **Subscription** and **Resource Group** (or create a new one).

|

||||

4. For **DNS domain name**, enter your domain (e.g., `openwebui.onmicrosoft.com`). This value will be used for `LDAP_SEARCH_BASE` later.

|

||||

5. Keep the default settings for **SKU**, **Replica Set**, etc., and click **Review + create**.


6. After deployment, navigate to the Azure AD DS blade and note the **Virtual network / Subnet**. If your Open WebUI server is not in the same VNet, you must create an NSG rule to allow traffic on port 636.


|

||||

|

||||

## 3. Find the Secure LDAP External IP

|

||||

|

||||

1. Navigate to your AAD DS Blade → **Overview**.

|

||||

2. Under **Secure LDAP**, find the **External IP addresses**.

|

||||

3. This IP (e.g., `1.222.222.222`) will be your `LDAP_SERVER_HOST` value in the `.env` file.


@@ -50,15 +54,17 @@ In a production environment, use a PFX certificate issued by a public Certificat

|

||||

| **Format** | PKCS #12 (.pfx) |

|

||||

| **Encryption** | RSA 2048 / SHA-256 |

|

||||

| **Subject Alternative Name (SAN)** | Must include wildcards: `*.{your_domain}.onmicrosoft.com` and `{your_domain}.onmicrosoft.com`. |

|

||||

| **Password** | Required for upload. Make sure to remember it. |


|

||||

|

||||

:::info

|

||||

|

||||

For production, we recommend using Let’s Encrypt or another public CA. The self-signed process below is for testing purposes only.

|

||||

|

||||

:::

|

||||

|

||||

### 4.2. Generate a Wildcard PFX with OpenSSL (for testing)

|

||||

|

||||

1. **Create `openssl_wildcard.cnf`:**

|

||||

1. **Create `openssl_wildcard.cnf`:**

|

||||

|

||||

```ini

|

||||

[ req ]

|

||||

@@ -95,7 +101,7 @@ For production, we recommend using Let’s Encrypt or another public CA. The sel

|

||||

```

|

||||

*Replace `{your_domain}` with your actual domain.*

|

||||

|

||||

2. **Generate Key & Certificate:**

|

||||

2. **Generate Key & Certificate:**

|

||||

|

||||

```bash

|

||||

# Generate a private key

|

||||

@@ -116,10 +122,10 @@ For production, we recommend using Let’s Encrypt or another public CA. The sel

|

||||

-inkey privatekey_wildcard.key -in certificate_wildcard.crt

|

||||

```

|

||||

|

||||

3. **Upload to AAD DS:**

|

||||

1. Navigate to your AAD DS Blade → **Secure LDAP**.

|

||||

2. Click the **Upload certificate** button, select `certificate_wildcard.pfx`, and enter the password.

|

||||

3. Toggle **Secure LDAP** to **Enabled** and click **Save**.


@@ -137,15 +143,17 @@ For production, we recommend using Let’s Encrypt or another public CA. The sel

|

||||

|

||||

|

||||

:::warning

|

||||

|

||||

If allowing access from the internet, restrict the source IP range to the minimum required for security. If Open WebUI is in the same VNet, this step can be skipped.

|

||||

|

||||

:::

|

||||

|

||||

## 6. Create a Service Account in Entra ID

|

||||

|

||||

1. In the Azure Portal, navigate to **Entra ID** → **Users** → **New user**.

|

||||

2. Set the username (e.g., `ldap@{your_domain}.onmicrosoft.com`).

|

||||

3. Set a strong password and uncheck **User must change password at next sign-in**.

|

||||

4. Go to the **Groups** tab and add the user to the **AAD DC Administrators** group (required for querying all users).


@@ -154,8 +162,11 @@ If allowing access from the internet, restrict the source IP range to the minimu

|

||||

Here is an example configuration for your `.env` file:

|

||||

|

||||

```env

|

||||

|

||||

###############################################

|

||||

|

||||

# LDAP

|

||||

|

||||

###############################################

|

||||

ENABLE_LDAP="true"

|

||||

LDAP_SERVER_LABEL="Azure AD DS"

|

||||

@@ -165,6 +176,7 @@ LDAP_SERVER_PORT="636"

|

||||

# TLS Options

|

||||

LDAP_USE_TLS="true"

|

||||

LDAP_VALIDATE_CERT="false" # Set to true for a public CA

|

||||

|

||||

#LDAP_CA_CERT_FILE="/etc/ssl/certs/openwebui_ca.crt"

|

||||

|

||||

# Bind Account

|

||||

@@ -178,8 +190,11 @@ LDAP_ATTRIBUTE_FOR_MAIL="userPrincipalName"

|

||||

LDAP_SEARCH_FILTER="(&(objectClass=user)(objectCategory=person))"

|

||||

|

||||

# Group Synchronization (Optional)

|

||||

|

||||

# ENABLE_LDAP_GROUP_MANAGEMENT="true"

|

||||

|

||||

# ENABLE_LDAP_GROUP_CREATION="true"

|

||||

|

||||

# LDAP_ATTRIBUTE_FOR_GROUPS="memberOf"

|

||||

```

|

||||

*Replace placeholders like `{your_domain}` and `<STRONG-PASSWORD>` with your actual values.*
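
The DNS domain name chosen when deploying Azure AD Domain Services is what feeds `LDAP_SEARCH_BASE`. In LDAP, a DNS name is conventionally written as a distinguished name by turning each dot-separated label into a `DC=` component. The sketch below shows that conversion; the helper name `domain_to_base_dn` is hypothetical, and the result should be checked against what your directory actually reports.

```python
def domain_to_base_dn(domain: str) -> str:
    """Convert a DNS domain name into an LDAP base DN made of DC= components."""
    labels = [label for label in domain.strip().strip(".").split(".") if label]
    if not labels:
        raise ValueError("domain must contain at least one label")
    return ",".join(f"DC={label}" for label in labels)


# Example with the tutorial's sample domain:
print(domain_to_base_dn("openwebui.onmicrosoft.com"))
# prints: DC=openwebui,DC=onmicrosoft,DC=com
```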

|

||||

|

||||

@@ -4,12 +4,14 @@ title: "🔄 Backend-Controlled, UI-Compatible API Flow"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the [contributing tutorial](/docs/tutorials/tips/contributing-tutorial.md).

|

||||

|

||||

:::

|

||||

|

||||

---

|

||||

|

||||

# Backend-Controlled, UI-Compatible API Flow

|

||||

## Backend-Controlled, UI-Compatible API Flow

|

||||

|

||||

This tutorial demonstrates how to implement server-side orchestration of Open WebUI conversations while ensuring that assistant replies appear properly in the frontend UI. This approach requires zero frontend involvement and allows complete backend control over the chat flow.

|

||||

This tutorial has been verified to work with Open WebUI version v0.6.15. Future versions may introduce changes in behavior or API structure.

|

||||

@@ -49,6 +51,7 @@ This enables server-side orchestration while still making replies show up in the

|

||||

The assistant message needs to be added to the chat response object in memory as a critical prerequisite before triggering the completion. This step is essential because the Open WebUI frontend expects assistant messages to exist in a specific structure.

|

||||

|

||||

The assistant message must appear in both locations:

|

||||

|

||||

- `chat.messages[]` - The main message array

|

||||

- `chat.history.messages[<assistantId>]` - The indexed message history
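
As a language-neutral illustration of the two locations, here is a minimal Python sketch that works on the chat object as a plain dictionary. The function name is hypothetical, the field set follows the required assistant-message fields listed later in this tutorial, and the millisecond timestamp is an assumption you should verify against your Open WebUI version.

```python
import time
import uuid


def add_empty_assistant_message(chat: dict, user_message_id: str, model: str) -> str:
    """Register an empty assistant message in both locations and return its ID."""
    assistant_id = str(uuid.uuid4())
    message = {
        "id": assistant_id,
        "role": "assistant",
        "content": "",  # stays empty until the completion fills it in
        "parentId": user_message_id,
        "modelName": model,
        "modelIdx": 0,
        "timestamp": time.time_ns() // 1_000_000,  # assumed: Unix time in milliseconds
    }
    # Location 1: the main message array.
    chat.setdefault("messages", []).append(dict(message))
    # Location 2: the history map, indexed by the assistant message ID.
    chat.setdefault("history", {}).setdefault("messages", {})[assistant_id] = dict(message)
    return assistant_id
```

The returned ID is the value the later completion request refers to, so it is worth keeping alongside the chat ID.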

|

||||

|

||||

@@ -122,8 +125,12 @@ public void enrichChatWithAssistantMessage(OWUIChatResponse chatResponse, String

|

||||

}

|

||||

```

|

||||

|

||||

:::note

|

||||

|

||||

**Note:** This step can be performed in memory on the response object, or combined with Step 1 by including both user and empty assistant messages in the initial chat creation.

|

||||

|

||||

:::

|

||||

|

||||

### Step 3: Update Chat with Assistant Message

|

||||

|

||||

Send the enriched chat state containing both user and assistant messages to the server:

|

||||

@@ -272,9 +279,11 @@ curl -X POST https://<host>/api/chat/completions \

|

||||

Assistant responses can be handled in two ways depending on your implementation needs:

|

||||

|

||||

#### Option A: Stream Processing (Recommended)

|

||||

|

||||

If using `stream: true` in the completion request, you can process the streamed response in real-time and wait for the stream to complete. This is the approach used by the OpenWebUI web interface and provides immediate feedback.

|

||||

|

||||

#### Option B: Polling Approach

|

||||

|

||||

For implementations that cannot handle streaming, poll the chat endpoint until the response is ready. Use a retry mechanism with exponential backoff:

|

||||

|

||||

```java

|

||||

@@ -300,17 +309,18 @@ public String getAssistantResponseWhenReady(String chatId, ChatCompletedRequest

|

||||

For manual polling, you can use:

|

||||

|

||||

```bash

|

||||

|

||||

# Poll every few seconds until assistant content is populated

|

||||

while true; do

|

||||

response=$(curl -s -X GET https://<host>/api/v1/chats/<chatId> \

|

||||

-H "Authorization: Bearer <token>")

|

||||

|

||||

|

||||

# Check if assistant message has content (response is ready)

|

||||

if echo "$response" | jq -e '.chat.messages[] | select(.role=="assistant" and .id=="assistant-msg-id") | .content | select(. != null and . != "")' > /dev/null; then

|

||||

echo "Assistant response is ready!"

|

||||

break

|

||||

fi

|

||||

|

||||

|

||||

echo "Waiting for assistant response..."

|

||||

sleep 2

|

||||

done

|

||||

@@ -404,6 +414,7 @@ curl -X POST https://<host>/api/v1/chats/<chatId> \

|

||||

Assistant responses may be wrapped in markdown code blocks. Here's how to clean them:

|

||||

|

||||

```bash

|

||||

|

||||

# Example raw response from assistant

|

||||

raw_response='```json

|

||||

{

|

||||

@@ -419,6 +430,7 @@ echo "$cleaned_response" | jq '.'

|

||||

```

|

||||

|

||||

This cleaning process handles:

|

||||

|

||||

- Removal of the `` ```json `` prefix

|

||||

- Removal of the `` ``` `` suffix

|

||||

- Trimming whitespace
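
The same three steps can be sketched in Python for backends that do not shell out. This is an illustrative helper, not part of Open WebUI; the name `clean_json_response` is hypothetical, and it assumes the assistant wrapped a single JSON document in one fenced block.

```python
import json

FENCE = "`" * 3  # the three-backtick Markdown fence marker


def clean_json_response(raw: str):
    """Strip a surrounding json code fence, trim whitespace, and parse the result."""
    text = raw.strip()
    prefix = FENCE + "json"
    if text.startswith(prefix):
        text = text[len(prefix):]   # remove the opening fence and language tag
    if text.endswith(FENCE):
        text = text[:-len(FENCE)]   # remove the closing fence
    return json.loads(text.strip())
```

`json.loads` raises `json.JSONDecodeError` if the remaining text is not valid JSON, which gives the caller a clear point at which to retry or report the failure.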

|

||||

@@ -797,24 +809,29 @@ This cleaning process handles:

|

||||

#### Required vs Optional Fields

|

||||

|

||||

**Chat Creation - Required Fields:**

|

||||

|

||||

- `title` - Chat title (string)

|

||||

- `models` - Array of model names (string[])

|

||||

- `messages` - Initial message array

|

||||

|

||||

**Chat Creation - Optional Fields:**

|

||||

|

||||

- `files` - Knowledge files for RAG (defaults to empty array)

|

||||

- `tags` - Chat tags (defaults to empty array)

|

||||

- `params` - Model parameters (defaults to empty object)

|

||||

|

||||

**Message Structure - User Message:**

|

||||

|

||||

- **Required:** `id`, `role`, `content`, `timestamp`, `models`

|

||||

- **Optional:** `parentId` (for threading)

|

||||

|

||||

**Message Structure - Assistant Message:**

|

||||

|

||||

- **Required:** `id`, `role`, `content`, `parentId`, `modelName`, `modelIdx`, `timestamp`

|

||||

- **Optional:** Additional metadata fields

|

||||

|

||||

**ChatCompletionsRequest - Required Fields:**

|

||||

|

||||

- `chat_id` - Target chat ID

|

||||

- `id` - Assistant message ID

|

||||

- `messages` - Array of ChatCompletionMessage

|

||||

@@ -822,6 +839,7 @@ This cleaning process handles:

|

||||

- `session_id` - Session identifier

|

||||

|

||||

**ChatCompletionsRequest - Optional Fields:**

|

||||

|

||||

- `stream` - Enable streaming (defaults to false)

|

||||

- `background_tasks` - Control automatic tasks

|

||||

- `features` - Enable/disable features

|

||||

@@ -832,22 +850,27 @@ This cleaning process handles:

|

||||

#### Field Constraints

|

||||

|

||||

**Timestamps:**

|

||||

|

||||

- Format: Unix timestamp in milliseconds

|

||||

- Example: `1720000000000` (July 3, 2024, 09:46:40 UTC)

|

||||

|

||||

**UUIDs:**

|

||||

|

||||

- All ID fields should use valid UUID format

|

||||

- Example: `550e8400-e29b-41d4-a716-446655440000`

|

||||

|

||||

**Model Names:**

|

||||

|

||||

- Must match available models in your Open WebUI instance

|

||||

- Common examples: `gpt-4o`, `gpt-3.5-turbo`, `claude-3-sonnet`

|

||||

|

||||

**Session IDs:**

|

||||

|

||||

- Can be any unique string identifier

|

||||

- Recommendation: Use UUID format for consistency

|

||||

|

||||

**Knowledge File Status:**

|

||||

|

||||

- Valid values: `"processed"`, `"processing"`, `"error"`

|

||||

- Only use `"processed"` files for completions
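
A short Python sketch of how these constraints might be satisfied when building a user message. The helper names are hypothetical; the field list mirrors the required user-message fields above, and the model name is whatever your own instance exposes.

```python
import time
import uuid


def now_ms() -> int:
    """Current Unix time in milliseconds."""
    return time.time_ns() // 1_000_000


def new_user_message(content: str, model: str) -> dict:
    """Build a user message with a UUID id and a millisecond timestamp."""
    return {
        "id": str(uuid.uuid4()),
        "role": "user",
        "content": content,
        "timestamp": now_ms(),
        "models": [model],  # must match a model available in your instance
    }
```

A session ID can be generated the same way with `str(uuid.uuid4())`, which keeps all identifiers in one format.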

|

||||

|

||||

@@ -878,6 +901,7 @@ Use the Open WebUI backend APIs to:

|

||||

7. **Fetch the final chat** - Retrieve and parse the completed conversation

|

||||

|

||||

**Enhanced Capabilities:**

|

||||

|

||||

- **RAG Integration** - Include knowledge collections for context-aware responses

|

||||

- **Asynchronous Processing** - Handle long-running AI operations with streaming or polling

|

||||

- **Response Parsing** - Clean and validate JSON responses from the assistant

|

||||

@@ -890,6 +914,7 @@ The key advantage of this approach is that it maintains full compatibility with

|

||||

## Testing

|

||||

|

||||

You can test your implementation by following the step-by-step cURL examples provided above. Make sure to replace placeholder values with your actual:

|

||||

|

||||

- Host URL

|

||||

- Authentication token

|

||||

- Chat IDs

|

||||

@@ -897,5 +922,7 @@ You can test your implementation by following the step-by-step CURL examples pro

|

||||

- Model names

|

||||

|

||||

:::tip

|

||||

|

||||

Start with a simple user message and gradually add complexity like knowledge integration and advanced features once the basic flow is working.

|

||||

|

||||

:::

|

||||

|

||||

@@ -4,10 +4,12 @@ title: "🌐 Browser Search Engine"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

|

||||

|

||||

:::

|

||||

|

||||

# Browser Search Engine Integration

|

||||

## Browser Search Engine Integration

|

||||

|

||||

Open WebUI allows you to integrate directly into your web browser. This tutorial will guide you through the process of setting up Open WebUI as a custom search engine, enabling you to execute queries easily from your browser's address bar.

|

||||

|

||||

@@ -57,7 +59,7 @@ WEBUI_URL=https://<your-open-webui-url>

|

||||

- **Keyword**: webui (or any keyword you prefer)

|

||||

- **URL with %s in place of query**:

|

||||

|

||||

```

|

||||

```txt

|

||||

https://<your-open-webui-url>/?q=%s

|

||||

```

|

||||

|

||||

@@ -79,17 +81,21 @@ Alternatively:

|

||||

|

||||

If you wish to utilize a specific model for your search, modify the URL format to include the model ID:

|

||||

|

||||

```

|

||||

```txt

|

||||

https://<your-open-webui-url>/?models=<model_id>&q=%s

|

||||

```

|

||||

|

||||

:::note

|

||||

|

||||

**Note:** The model ID will need to be URL-encoded. Special characters like spaces or slashes need to be encoded (e.g., `my model` becomes `my%20model`).

|

||||

|

||||

:::
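
If you would rather not encode the model ID by hand, a few lines of Python can produce the search URL to paste into the browser settings. This is only a convenience sketch; the function name and the example host are hypothetical.

```python
from typing import Optional
from urllib.parse import quote


def build_search_url(base_url: str, model_id: Optional[str] = None) -> str:
    """Return the search-engine URL, keeping %s as the browser's query placeholder."""
    url = f"{base_url.rstrip('/')}/?"
    if model_id:
        # safe="" also encodes slashes, which appear in some model IDs.
        url += f"models={quote(model_id, safe='')}&"
    return url + "q=%s"


print(build_search_url("https://chat.example.com", "my model"))
# prints: https://chat.example.com/?models=my%20model&q=%s
```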

|

||||

|

||||

## Example Usage

|

||||

|

||||

Once the search engine is set up, you can perform searches directly from the address bar. Simply type your chosen keyword followed by your query:

|

||||

|

||||

```

|

||||

```txt

|

||||

webui your search query

|

||||

```

|

||||

|

||||

|

||||

@@ -4,10 +4,12 @@ title: "⚛️ Continue.dev VS Code Extension with Open WebUI"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the [contributing tutorial](/docs/contributing.mdx).

|

||||

|

||||

:::

|

||||

|

||||

# Integrating Continue.dev VS Code Extension with Open WebUI

|

||||

## Integrating Continue.dev VS Code Extension with Open WebUI

|

||||

|

||||

## Download Extension

|

||||

|

||||

@@ -30,7 +32,7 @@ This will open the `config.yaml` file in your editor. Here you can change the se

|

||||

:::info

|

||||

|

||||

Currently, the `ollama` provider does not support authentication, so we cannot use this provider with Open WebUI.

|

||||

However, Ollama and Open WebUI are both compatible with the OpenAI API spec. Read more about the specification in the [Ollama blog post](https://ollama.com/blog/openai-compatibility).

|

||||

However, Ollama and Open WebUI are both compatible with the OpenAI API spec. Read more about the specification in the [Ollama blog post on OpenAI compatibility](https://ollama.com/blog/openai-compatibility).

|

||||

We can still set up continue.dev to use the `openai` provider, which allows us to use Open WebUI's authentication token.

|

||||

|

||||

:::

|

||||

@@ -150,7 +152,7 @@ env:

|

||||

### APIBase

|

||||

|

||||

This is a crucial step: you need to direct the continue.dev extension requests to your Open WebUI instance.

|

||||

Either use an actual domain name if the instance is hosted somewhere (e.g. `https://example.com/api`) or your localhost setup (e.g. `http://localhost:3000/api`).

|

||||

Either use an actual domain name if the instance is hosted somewhere (e.g., `https://example.com/api`) or your localhost setup (e.g., `http://localhost:3000/api`).

|

||||

You can find more information about the URLs in the [API Endpoints guide](/docs/getting-started/api-endpoints.md).

|

||||

|

||||

```yaml

|

||||

|

||||

@@ -4,10 +4,12 @@ title: "🛃 Setting up with Custom CA Store"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

|

||||

|

||||

:::

|

||||

|

||||

If you get an `[SSL: CERTIFICATE_VERIFY_FAILED]` error when trying to run OI, most likely the issue is that you are on a network which intercepts HTTPS traffic (e.g. a corporate network).

|

||||

If you get an `[SSL: CERTIFICATE_VERIFY_FAILED]` error when trying to run OI, most likely the issue is that you are on a network which intercepts HTTPS traffic (e.g., a corporate network).

|

||||

|

||||

To fix this, you will need to add the new cert into OI's truststore.

|

||||

|

||||

|

||||

@@ -3,36 +3,43 @@ sidebar_position: 1

|

||||

title: "🐋 Run DeepSeek R1 Dynamic 1.58-bit with Llama.cpp"

|

||||

---

|

||||

|

||||

A huge shoutout to **UnslothAI** for their incredible efforts! Thanks to their hard work, we can now run the **full DeepSeek-R1** 671B parameter model in its dynamic 1.58-bit quantized form (compressed to just 131GB) on **Llama.cpp**! And the best part? You no longer have to despair about needing massive enterprise-class GPUs or servers — it’s possible to run this model on your personal machine (albeit slowly for most consumer hardware).

|

||||

A huge shoutout to **UnslothAI** for their incredible efforts! Thanks to their hard work, we can now run the **full DeepSeek-R1** 671B parameter model in its dynamic 1.58-bit quantized form (compressed to just 131GB) on **Llama.cpp**! And the best part? You no longer have to despair about needing massive enterprise-class GPUs or servers — it’s possible to run this model on your personal machine (albeit slowly for most consumer hardware).

|

||||

|

||||

:::note

|

||||

|

||||

The only true **DeepSeek-R1** model on Ollama is the **671B version** available here: [https://ollama.com/library/deepseek-r1:671b](https://ollama.com/library/deepseek-r1:671b). Other versions are **distilled** models.

|

||||

|

||||

:::

|

||||

|

||||

This guide focuses on running the **full DeepSeek-R1 Dynamic 1.58-bit quantized model** using **Llama.cpp** integrated with **Open WebUI**. For this tutorial, we’ll demonstrate the steps with an **M4 Max + 128GB RAM** machine. You can adapt the settings to your own configuration.

|

||||

This guide focuses on running the **full DeepSeek-R1 Dynamic 1.58-bit quantized model** using **Llama.cpp** integrated with **Open WebUI**. For this tutorial, we’ll demonstrate the steps with an **M4 Max + 128GB RAM** machine. You can adapt the settings to your own configuration.

|

||||

|

||||

---

|

||||

|

||||

## Step 1: Install Llama.cpp

|

||||

## Step 1: Install Llama.cpp

|

||||

|

||||

You can either:

|

||||

- [Download the prebuilt binaries](https://github.com/ggerganov/llama.cpp/releases)

|

||||

- **Or build it yourself**: Follow the instructions here: [Llama.cpp Build Guide](https://github.com/ggerganov/llama.cpp/blob/master/docs/build.md)

|

||||

You can either:

|

||||

|

||||

## Step 2: Download the Model Provided by UnslothAI

|

||||

- [Download the prebuilt binaries](https://github.com/ggerganov/llama.cpp/releases)

|

||||

- **Or build it yourself**: Follow the instructions in the [Llama.cpp Build Guide](https://github.com/ggerganov/llama.cpp/blob/master/docs/build.md)

|

||||

|

||||

## Step 2: Download the Model Provided by UnslothAI

|

||||

|

||||

Head over to [Unsloth’s Hugging Face page](https://huggingface.co/unsloth/DeepSeek-R1-GGUF) and download the appropriate **dynamic quantized version** of DeepSeek-R1. For this tutorial, we’ll use the **1.58-bit (131GB)** version, which is highly optimized yet remains surprisingly functional.

|

||||

|

||||

|

||||

:::tip

|

||||

Know your "working directory" — where your Python script or terminal session is running. The model files will download to a subfolder of that directory by default, so be sure you know its path! For example, if you're running the command below in `/Users/yourname/Documents/projects`, your downloaded model will be saved under `/Users/yourname/Documents/projects/DeepSeek-R1-GGUF`.

|

||||

|

||||

Know your "working directory" — where your Python script or terminal session is running. The model files will download to a subfolder of that directory by default, so be sure you know its path! For example, if you're running the command below in `/Users/yourname/Documents/projects`, your downloaded model will be saved under `/Users/yourname/Documents/projects/DeepSeek-R1-GGUF`.

|

||||

|

||||

:::

|

||||

|

||||

To understand more about UnslothAI’s development process and why these dynamic quantized versions are so efficient, check out their blog post: [UnslothAI DeepSeek R1 Dynamic Quantization](https://unsloth.ai/blog/deepseekr1-dynamic).

|

||||

To understand more about UnslothAI’s development process and why these dynamic quantized versions are so efficient, check out their blog post: [UnslothAI DeepSeek R1 Dynamic Quantization](https://unsloth.ai/blog/deepseekr1-dynamic).

|

||||

|

||||

Here’s how to download the model programmatically:

|

||||

|

||||

Here’s how to download the model programmatically:

|

||||

```python

|

||||

|

||||

# Install Hugging Face dependencies before running this:

|

||||

|

||||

# pip install huggingface_hub hf_transfer

|

||||

|

||||

from huggingface_hub import snapshot_download

|

||||

@@ -44,8 +51,9 @@ snapshot_download(

|

||||

)

|

||||

```

|

||||

|

||||

Once the download completes, you’ll find the model files in a directory structure like this:

|

||||

```

|

||||

Once the download completes, you’ll find the model files in a directory structure like this:

|

||||

|

||||

```txt

|

||||

DeepSeek-R1-GGUF/

|

||||

├── DeepSeek-R1-UD-IQ1_S/

|

||||

│ ├── DeepSeek-R1-UD-IQ1_S-00001-of-00003.gguf

|

||||

@@ -54,34 +62,38 @@ DeepSeek-R1-GGUF/

|

||||

```

|

||||

|

||||

:::info

|

||||

🛠️ Update paths in the later steps to **match your specific directory structure**. For example, if your script was in `/Users/tim/Downloads`, the full path to the GGUF file would be:

|

||||

|

||||

🛠️ Update paths in the later steps to **match your specific directory structure**. For example, if your script was in `/Users/tim/Downloads`, the full path to the GGUF file would be:

|

||||

`/Users/tim/Downloads/DeepSeek-R1-GGUF/DeepSeek-R1-UD-IQ1_S/DeepSeek-R1-UD-IQ1_S-00001-of-00003.gguf`.

|

||||

|

||||

:::
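
To avoid typing the full path by hand, the first shard can be located programmatically. The sketch below assumes the shard naming shown in the directory listing above (a `-00001-of-` segment in the filename); the function name is hypothetical.

```python
from pathlib import Path


def first_gguf_shard(model_dir: Path) -> Path:
    """Return the absolute path of the first split GGUF file in model_dir."""
    shards = sorted(model_dir.glob("*-00001-of-*.gguf"))
    if not shards:
        raise FileNotFoundError(f"no first GGUF shard found in {model_dir}")
    return shards[0].resolve()
```

For example, `first_gguf_shard(Path.cwd() / "DeepSeek-R1-GGUF" / "DeepSeek-R1-UD-IQ1_S")` gives the path to use wherever the later steps ask for the model file.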

|

||||

|

||||

## Step 3: Make Sure Open WebUI is Installed and Running

|

||||

## Step 3: Make Sure Open WebUI is Installed and Running

|

||||

|

||||

If you don’t already have **Open WebUI** installed, no worries! It’s a simple setup. Just follow the [Open WebUI documentation here](https://docs.openwebui.com/). Once installed, start the application — we’ll connect it in a later step to interact with the DeepSeek-R1 model.

|

||||

If you don’t already have **Open WebUI** installed, no worries! It’s a simple setup. Just follow the [Open WebUI documentation](https://docs.openwebui.com/). Once installed, start the application — we’ll connect it in a later step to interact with the DeepSeek-R1 model.

|

||||

|

||||

## Step 4: Serve the Model Using Llama.cpp

|

||||

|

||||

## Step 4: Serve the Model Using Llama.cpp

|

||||

Now that the model is downloaded, the next step is to run it using **Llama.cpp’s server mode**. Before you begin:

|

||||

|

||||

Now that the model is downloaded, the next step is to run it using **Llama.cpp’s server mode**. Before you begin:

|

||||

1. **Locate the `llama-server` binary.**

|

||||

If you built from source (as outlined in Step 1), the `llama-server` executable will be located in `llama.cpp/build/bin`. Navigate to this directory by using the `cd` command:

|

||||

|

||||

1. **Locate the `llama-server` binary.**

|

||||

If you built from source (as outlined in Step 1), the `llama-server` executable will be located in `llama.cpp/build/bin`. Navigate to this directory by using the `cd` command:

|

||||

```bash

|

||||

cd [path-to-llama-cpp]/llama.cpp/build/bin

|

||||

```

|

||||

|

||||

Replace `[path-to-llama-cpp]` with the location where you cloned or built Llama.cpp. For example:

|

||||

Replace `[path-to-llama-cpp]` with the location where you cloned or built Llama.cpp. For example:

|

||||

|

||||

```bash

|

||||

cd ~/Documents/workspace/llama.cpp/build/bin

|

||||

```

|

||||

|

||||

2. **Point to your model folder.**

|

||||

Use the full path to the downloaded GGUF files created in Step 2. When serving the model, specify the first part of the split GGUF files (e.g., `DeepSeek-R1-UD-IQ1_S-00001-of-00003.gguf`).

|

||||

2. **Point to your model folder.**

|

||||

Use the full path to the downloaded GGUF files created in Step 2. When serving the model, specify the first part of the split GGUF files (e.g., `DeepSeek-R1-UD-IQ1_S-00001-of-00003.gguf`).
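If you are unsure which file is the first part, a small helper can find it for you. The sketch below assumes the shard naming shown above (`-00001-of-0000N.gguf`); the function name `first_gguf_shard` is illustrative and not part of Llama.cpp.

```bash
# Print the path of the first shard of a split GGUF model in a directory.
# Assumes shards follow the "<name>-00001-of-<total>.gguf" naming pattern.
first_gguf_shard() {
  local dir="$1"
  local shard
  for shard in "$dir"/*-00001-of-*.gguf; do
    # If the glob matched nothing, the literal pattern comes back; skip it.
    if [ -f "$shard" ]; then
      printf '%s\n' "$shard"
      return 0
    fi
  done
  echo "No first shard found in: $dir" >&2
  return 1
}
```

For example, `first_gguf_shard /Users/tim/Downloads/DeepSeek-R1-GGUF/DeepSeek-R1-UD-IQ1_S` would print the path to pass to `--model`.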

|

||||

|

||||

Here’s the command to start the server:

|

||||

|

||||

Here’s the command to start the server:

|

||||

```bash

|

||||

./llama-server \

|

||||

--model /[your-directory]/DeepSeek-R1-GGUF/DeepSeek-R1-UD-IQ1_S/DeepSeek-R1-UD-IQ1_S-00001-of-00003.gguf \

|

||||

@@ -90,17 +102,19 @@ Here’s the command to start the server:

|

||||

--n-gpu-layers 40

|

||||

```

|

||||

|

||||

|

||||

:::tip

|

||||

🔑 **Parameters to Customize Based on Your Machine:**

|

||||

|

||||

- **`--model`:** Replace `/[your-directory]/` with the path where the GGUF files were downloaded in Step 2.

|

||||

- **`--port`:** The server default is `8080`, but feel free to change it based on your port availability.

|

||||

- **`--ctx-size`:** Determines context length (number of tokens). You can increase it if your hardware allows, but be cautious of rising RAM/VRAM usage.

|

||||

🔑 **Parameters to Customize Based on Your Machine:**

|

||||

|

||||

- **`--model`:** Replace `/[your-directory]/` with the path where the GGUF files were downloaded in Step 2.

|

||||

- **`--port`:** The server default is `8080`, but feel free to change it based on your port availability.

|

||||

- **`--ctx-size`:** Determines context length (number of tokens). You can increase it if your hardware allows, but be cautious of rising RAM/VRAM usage.

|

||||

- **`--n-gpu-layers`:** Set the number of layers you want to offload to your GPU for faster inference. The exact number depends on your GPU’s memory capacity — reference Unsloth’s table for specific recommendations.

|

||||

|

||||

:::

|

||||

|

||||

For example, if your model was downloaded to `/Users/tim/Documents/workspace`, your command would look like this:

|

||||

For example, if your model was downloaded to `/Users/tim/Documents/workspace`, your command would look like this:

|

||||

|

||||

```bash

|

||||

./llama-server \

|

||||

--model /Users/tim/Documents/workspace/DeepSeek-R1-GGUF/DeepSeek-R1-UD-IQ1_S/DeepSeek-R1-UD-IQ1_S-00001-of-00003.gguf \

|

||||

@@ -109,61 +123,66 @@ For example, if your model was downloaded to `/Users/tim/Documents/workspace`, y

|

||||

--n-gpu-layers 40

|

||||

```

|

||||

|

||||

Once the server starts, it will host a **local OpenAI-compatible API** endpoint at:

|

||||

```

|

||||

Once the server starts, it will host a **local OpenAI-compatible API** endpoint at:

|

||||

|

||||

```txt

|

||||

http://127.0.0.1:10000

|

||||

```
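To confirm the server is reachable before moving on, you can query it from a second terminal. This is a minimal sketch that assumes the port `10000` from the example above and that your Llama.cpp build exposes the `/health` and `/v1/models` routes; `check_llama_server` is a hypothetical helper name.

```bash
# Base URL of the local Llama.cpp server (port taken from the example above).
LLAMA_BASE_URL="${LLAMA_BASE_URL:-http://127.0.0.1:10000}"

# Query the health route, then list the models the server advertises.
# Returns non-zero if the server cannot be reached.
check_llama_server() {
  curl --fail --silent --show-error "${LLAMA_BASE_URL}/health" || return 1
  echo
  curl --fail --silent --show-error "${LLAMA_BASE_URL}/v1/models"
}
```

Call `check_llama_server` once the model has finished loading; a connection error usually means the server is still starting or is listening on a different port.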

|

||||

|

||||

:::info

|

||||

🖥️ **Llama.cpp Server Running**

|

||||

|

||||

|

||||

🖥️ **Llama.cpp Server Running**

|

||||

|

||||

After running the command, you should see a message confirming the server is active and listening on port 10000.

|

||||

|

||||

:::

|

||||

|

||||

Be sure to **keep this terminal session running**, as it serves the model for all subsequent steps.

|

||||

|

||||

## Step 5: Connect Llama.cpp to Open WebUI

|

||||

## Step 5: Connect Llama.cpp to Open WebUI

|

||||

|

||||

1. Go to **Admin Settings** in Open WebUI.

|

||||

2. Navigate to **Connections > OpenAI Connections.**

|

||||

3. Add the following details for the new connection:

|

||||

1. Go to **Admin Settings** in Open WebUI.

|

||||

2. Navigate to **Connections > OpenAI Connections.**

|

||||

3. Add the following details for the new connection:

|

||||

- URL: `http://127.0.0.1:10000/v1` (or `http://host.docker.internal:10000/v1` when running Open WebUI in docker)

|

||||

- API Key: `none`

|

||||

|

||||

:::info

|

||||

🖥️ **Adding Connection in Open WebUI**

|

||||

|

||||

|

||||

🖥️ **Adding Connection in Open WebUI**

|

||||

|

||||

After running the command, you should see a message confirming the server is active and listening on port 10000.

|

||||

|

||||

:::

|

||||

|

||||

Once the connection is saved, you can start querying **DeepSeek-R1** directly from Open WebUI! 🎉

|

||||

Once the connection is saved, you can start querying **DeepSeek-R1** directly from Open WebUI! 🎉

|

||||

|

||||

---

|

||||

|

||||

## Example: Generating Responses

|

||||

## Example: Generating Responses

|

||||

|

||||

You can now use Open WebUI’s chat interface to interact with the **DeepSeek-R1 Dynamic 1.58-bit model**.

|

||||

You can now use Open WebUI’s chat interface to interact with the **DeepSeek-R1 Dynamic 1.58-bit model**.
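The same model can also be queried from the command line through the OpenAI-compatible endpoint, which is handy for checking that a slow response comes from the model and not from the UI. The sketch below assumes the server address used earlier; the `model` label `deepseek-r1` and the helper names are illustrative placeholders.

```bash
# Address of the local Llama.cpp server started in Step 4.
LLAMA_BASE_URL="${LLAMA_BASE_URL:-http://127.0.0.1:10000}"

# Emit a minimal chat-completions request body for the given prompt.
# The prompt is inserted verbatim, so avoid quotes or backslashes in it.
chat_payload() {
  printf '{"model":"deepseek-r1","messages":[{"role":"user","content":"%s"}]}' "$1"
}

# Send one prompt to the server and print the raw JSON response.
ask_llama() {
  curl --fail --silent --show-error \
    -H "Content-Type: application/json" \
    -d "$(chat_payload "$1")" \
    "${LLAMA_BASE_URL}/v1/chat/completions"
}
```

For example, `ask_llama "Why is the sky blue?"` prints the JSON response once generation finishes, which may take a while on personal hardware.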

|

||||

|

||||

|

||||

---

|

||||

|

||||

## Notes and Considerations

|

||||

## Notes and Considerations

|

||||

|

||||

- **Performance:**

|

||||

Running a massive 131GB model like DeepSeek-R1 on personal hardware will be **slow**. Even with our M4 Max (128GB RAM), inference speeds were modest. But the fact that it works at all is a testament to UnslothAI’s optimizations.

|

||||

- **Performance:**

|

||||

Running a massive 131GB model like DeepSeek-R1 on personal hardware will be **slow**. Even with our M4 Max (128GB RAM), inference speeds were modest. But the fact that it works at all is a testament to UnslothAI’s optimizations.

|

||||

|

||||

- **VRAM/Memory Requirements:**

|

||||

Ensure sufficient VRAM and system RAM for optimal performance. With low-end GPUs or CPU-only setups, expect slower speeds (but it’s still doable!).

|

||||

- **VRAM/Memory Requirements:**

|

||||

Ensure sufficient VRAM and system RAM for optimal performance. With low-end GPUs or CPU-only setups, expect slower speeds (but it’s still doable!).

|

||||

|

||||

---

|

||||

|

||||

Thanks to **UnslothAI** and **Llama.cpp**, running one of the largest open-source reasoning models, **DeepSeek-R1** (1.58-bit version), is finally accessible to individuals. While it’s challenging to run such models on consumer hardware, the ability to do so without massive computational infrastructure is a significant technological milestone.

|

||||

Thanks to **UnslothAI** and **Llama.cpp**, running one of the largest open-source reasoning models, **DeepSeek-R1** (1.58-bit version), is finally accessible to individuals. While it’s challenging to run such models on consumer hardware, the ability to do so without massive computational infrastructure is a significant technological milestone.

|

||||

|

||||

⭐ Big thanks to the community for pushing the boundaries of open AI research.

|

||||

⭐ Big thanks to the community for pushing the boundaries of open AI research.

|

||||

|

||||

Happy experimenting! 🚀

|

||||

Happy experimenting! 🚀

|

||||

|

||||

@@ -4,26 +4,28 @@ title: "🦊 Firefox AI Chatbot Sidebar"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

|

||||

|

||||

:::

|

||||

|

||||

## 🦊 Firefox AI Chatbot Sidebar

|

||||

|

||||

# Integrating Open WebUI as a Local AI Chatbot Browser Assistant in Mozilla Firefox

|

||||

## Integrating Open WebUI as a Local AI Chatbot Browser Assistant in Mozilla Firefox

|

||||

|

||||

## Prerequisites

|

||||

|

||||

Before integrating Open WebUI as an AI chatbot browser assistant in Mozilla Firefox, ensure you have:

|

||||

|

||||

* Open WebUI instance URL (local or domain)

|

||||

* Firefox browser installed

|

||||

- Open WebUI instance URL (local or domain)

|

||||

- Firefox browser installed

|

||||

|

||||

## Enabling AI Chatbot in Firefox

|

||||

|

||||

1. Click on the hamburger button (three horizontal lines button at the top right corner, just below the `X` button)

|

||||

2. Open up Firefox settings

|

||||

2. Click on the `Firefox Labs` section

|

||||

3. Toggle on `AI Chatbot`

|

||||

3. Click on the `Firefox Labs` section

|

||||

4. Toggle on `AI Chatbot`

|

||||

|

||||

Alternatively, you can enable AI Chatbot through the `about:config` page (described in the next section).

|

||||

|

||||

@@ -34,11 +36,11 @@ Alternatively, you can enable AI Chatbot through the `about:config` page (descri

|

||||

3. Search for `browser.ml.chat.enabled` and toggle it to `true` if it's not already enabled through Firefox Labs

|

||||

4. Search for `browser.ml.chat.hideLocalhost` and toggle it to `false`

|

||||

|

||||

### browser.ml.chat.prompts.#

|

||||

### browser.ml.chat.prompts.{#}

|

||||

|

||||

To add custom prompts, follow these steps:

|

||||

|

||||

1. Search for `browser.ml.chat.prompts.#` (replace `#` with a number, e.g., `0`, `1`, `2`, etc.)

|

||||

1. Search for `browser.ml.chat.prompts.{#}` (replace `{#}` with a number, e.g., `0`, `1`, `2`, etc.)

|

||||

2. Click the `+` button to add a new prompt

|

||||

3. Enter the prompt label, value, and ID (e.g., `{"id":"My Prompt", "value": "This is my custom prompt.", "label": "My Prompt"}`)

|

||||

4. Repeat the process to add more prompts as desired

|

||||

@@ -54,32 +56,32 @@ The following URL parameters can be used to customize your Open WebUI instance:

|

||||

|

||||

### Models and Model Selection

|

||||

|

||||

* `models`: Specify multiple models (comma-separated list) for the chat session (e.g., `/?models=model1,model2`)

|

||||

* `model`: Specify a single model for the chat session (e.g., `/?model=model1`)

|

||||

- `models`: Specify multiple models (comma-separated list) for the chat session (e.g., `/?models=model1,model2`)

|

||||

- `model`: Specify a single model for the chat session (e.g., `/?model=model1`)

|

||||

|

||||

### YouTube Transcription

|

||||

|

||||

* `youtube`: Provide a YouTube video ID to transcribe the video in the chat (e.g., `/?youtube=VIDEO_ID`)

|

||||

- `youtube`: Provide a YouTube video ID to transcribe the video in the chat (e.g., `/?youtube=VIDEO_ID`)

|

||||

|

||||

### Web Search

|

||||

|

||||

* `web-search`: Enable web search functionality by setting this parameter to `true` (e.g., `/?web-search=true`)

|

||||

- `web-search`: Enable web search functionality by setting this parameter to `true` (e.g., `/?web-search=true`)

|

||||

|

||||

### Tool Selection

|

||||

|

||||

* `tools` or `tool-ids`: Specify a comma-separated list of tool IDs to activate in the chat (e.g., `/?tools=tool1,tool2` or `/?tool-ids=tool1,tool2`)

|

||||

- `tools` or `tool-ids`: Specify a comma-separated list of tool IDs to activate in the chat (e.g., `/?tools=tool1,tool2` or `/?tool-ids=tool1,tool2`)

|

||||

|

||||

### Call Overlay

|

||||

|

||||

* `call`: Enable a video or call overlay in the chat interface by setting this parameter to `true` (e.g., `/?call=true`)

|

||||

- `call`: Enable a video or call overlay in the chat interface by setting this parameter to `true` (e.g., `/?call=true`)

|

||||

|

||||

### Initial Query Prompt

|

||||

|

||||

* `q`: Set an initial query or prompt for the chat (e.g., `/?q=Hello%20there`)

|

||||

- `q`: Set an initial query or prompt for the chat (e.g., `/?q=Hello%20there`)

|

||||

|

||||

### Temporary Chat Sessions

|

||||

|

||||

* `temporary-chat`: Mark the chat as a temporary session by setting this parameter to `true` (e.g., `/?temporary-chat=true`)

|

||||

- `temporary-chat`: Mark the chat as a temporary session by setting this parameter to `true` (e.g., `/?temporary-chat=true`)

|

||||

|

||||

See https://docs.openwebui.com/features/chat-features/url-params for more info on URL parameters and how to use them.
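Several of these parameters can be combined in one URL. The helper below is a small sketch of how such a URL can be assembled; `build_openwebui_url` is a hypothetical name, and `http://localhost:8080` stands in for your own instance address.

```bash
# Build an Open WebUI URL from a base address and key=value parameters.
# Values must already be URL-encoded (for example, spaces as %20).
build_openwebui_url() {
  local base="$1"
  shift
  local query=""
  local param
  for param in "$@"; do
    if [ -z "$query" ]; then
      query="$param"
    else
      query="${query}&${param}"
    fi
  done
  if [ -n "$query" ]; then
    printf '%s/?%s\n' "${base%/}" "$query"
  else
    printf '%s/\n' "${base%/}"
  fi
}

build_openwebui_url "http://localhost:8080" "model=model1" "web-search=true" "q=Hello%20there"
# prints: http://localhost:8080/?model=model1&web-search=true&q=Hello%20there
```

Joining parameters with `&` after a single `?` keeps the URL valid no matter how many options you add.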

|

||||

|

||||

@@ -87,26 +89,26 @@ See https://docs.openwebui.com/features/chat-features/url-params for more info o

|

||||

|

||||

The following `about:config` settings can be adjusted for further customization:

|

||||

|

||||

* `browser.ml.chat.shortcuts`: Enable custom shortcuts for the AI chatbot sidebar

|

||||

* `browser.ml.chat.shortcuts.custom`: Enable custom shortcut keys for the AI chatbot sidebar

|

||||

* `browser.ml.chat.shortcuts.longPress`: Set the long press delay for shortcut keys

|

||||

* `browser.ml.chat.sidebar`: Enable the AI chatbot sidebar

|

||||

* `browser.ml.checkForMemory`: Check for available memory before loading models

|

||||

* `browser.ml.defaultModelMemoryUsage`: Set the default memory usage for models

|

||||

* `browser.ml.enable`: Enable the machine learning features in Firefox

|

||||

* `browser.ml.logLevel`: Set the log level for machine learning features

|

||||

* `browser.ml.maximumMemoryPressure`: Set the maximum memory pressure threshold

|

||||

* `browser.ml.minimumPhysicalMemory`: Set the minimum physical memory required

|

||||

* `browser.ml.modelCacheMaxSize`: Set the maximum size of the model cache

|

||||

* `browser.ml.modelCacheTimeout`: Set the timeout for model cache

|

||||

* `browser.ml.modelHubRootUrl`: Set the root URL for the model hub

|

||||

* `browser.ml.modelHubUrlTemplate`: Set the URL template for the model hub

|

||||

* `browser.ml.queueWaitInterval`: Set the interval for queue wait

|

||||

* `browser.ml.queueWaitTimeout`: Set the timeout for queue wait

|

||||

- `browser.ml.chat.shortcuts`: Enable custom shortcuts for the AI chatbot sidebar

|

||||

- `browser.ml.chat.shortcuts.custom`: Enable custom shortcut keys for the AI chatbot sidebar

|

||||

- `browser.ml.chat.shortcuts.longPress`: Set the long press delay for shortcut keys

|

||||

- `browser.ml.chat.sidebar`: Enable the AI chatbot sidebar

|

||||

- `browser.ml.checkForMemory`: Check for available memory before loading models

|

||||

- `browser.ml.defaultModelMemoryUsage`: Set the default memory usage for models

|

||||

- `browser.ml.enable`: Enable the machine learning features in Firefox

|

||||

- `browser.ml.logLevel`: Set the log level for machine learning features

|

||||

- `browser.ml.maximumMemoryPressure`: Set the maximum memory pressure threshold

|

||||

- `browser.ml.minimumPhysicalMemory`: Set the minimum physical memory required

|

||||

- `browser.ml.modelCacheMaxSize`: Set the maximum size of the model cache

|

||||

- `browser.ml.modelCacheTimeout`: Set the timeout for model cache

|

||||

- `browser.ml.modelHubRootUrl`: Set the root URL for the model hub

|

||||

- `browser.ml.modelHubUrlTemplate`: Set the URL template for the model hub

|

||||

- `browser.ml.queueWaitInterval`: Set the interval for queue wait

|

||||

- `browser.ml.queueWaitTimeout`: Set the timeout for queue wait

|

||||

|

||||

## Accessing the AI Chatbot Sidebar

|

||||

|

||||

To access the AI chatbot sidebar, use one of the following methods:

|

||||

|

||||

* Press `CTRL+B` to open the bookmarks sidebar and switch to AI Chatbot

|

||||

* Press `CTRL+Alt+X` to open the AI chatbot sidebar directly

|

||||

- Press `CTRL+B` to open the bookmarks sidebar and switch to AI Chatbot

|

||||

- Press `CTRL+Alt+X` to open the AI chatbot sidebar directly

|

||||

|

||||

@@ -4,10 +4,12 @@ sidebar_position: 19

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

|

||||

|

||||

:::

|

||||

|

||||

# Helicone Integration with Open WebUI

|

||||

## Helicone Integration with Open WebUI

|

||||

|

||||

Helicone is an open-source LLM observability platform for developers to monitor, debug, and improve **production-ready** applications, including your Open WebUI deployment.

|

||||

|

||||

@@ -21,7 +23,6 @@ By enabling Helicone, you can log LLM requests, evaluate and experiment with pro

|

||||

- **Debug capabilities** to troubleshoot issues with model responses

|

||||

- **Cost tracking** for your LLM usage across providers

|

||||

|

||||

|

||||

## How to integrate Helicone with OpenWebUI

|

||||

|

||||

<iframe

|

||||

@@ -36,7 +37,7 @@ By enabling Helicone, you can log LLM requests, evaluate and experiment with pro

|

||||

|

||||

### Step 1: Create a Helicone account and generate your API key

|

||||

|

||||

Create a [Helicone account](https://www.helicone.ai/) and log in to generate an [API key here](https://us.helicone.ai/settings/api-keys).

|

||||

Create a [Helicone account](https://www.helicone.ai/) and log in to generate an [API key](https://us.helicone.ai/settings/api-keys).

|

||||

|

||||

*— Make sure to generate a [write only API key](https://docs.helicone.ai/helicone-headers/helicone-auth). This ensures you only allow logging data to Helicone without read access to your private data.*

|

||||

|

||||

@@ -78,4 +79,4 @@ You should see the requests you have made through your Open WebUI interface alre

|

||||

|

||||

## Learn more

|

||||

|

||||

For a comprehensive guide on Helicone, you can check out [Helicone's documentation here](https://docs.helicone.ai/getting-started/quick-start).

|

||||

For a comprehensive guide on Helicone, you can check out [Helicone's documentation](https://docs.helicone.ai/getting-started/quick-start).

|

||||

|

||||

@@ -4,17 +4,23 @@ title: "🖥️ Local LLM Setup with IPEX-LLM on Intel GPU"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

|

||||

|

||||

:::

|

||||

|

||||

:::note

|

||||

This guide is verified with Open WebUI setup through [Manual Installation](/getting-started/index.md).

|

||||

|

||||

This guide is verified with Open WebUI setup through [Manual Installation](docs/getting-started/index.md).

|

||||

|

||||

:::

|

||||

|

||||

# Local LLM Setup with IPEX-LLM on Intel GPU

|

||||

## Local LLM Setup with IPEX-LLM on Intel GPU

|

||||

|

||||

:::info

|

||||

|

||||

[**IPEX-LLM**](https://github.com/intel-analytics/ipex-llm) is a PyTorch library for running LLM on Intel CPU and GPU (e.g., local PC with iGPU, discrete GPU such as Arc A-Series, Flex and Max) with very low latency.

|

||||

|

||||

:::

|

||||

|

||||

This tutorial demonstrates how to set up Open WebUI with an **IPEX-LLM accelerated Ollama backend hosted on Intel GPU**. By following this guide, you will be able to set up Open WebUI even on a low-cost PC (i.e., one with only an integrated GPU) with a smooth experience.

|

||||

@@ -24,7 +30,9 @@ This tutorial demonstrates how to setup Open WebUI with **IPEX-LLM accelerated O

|

||||

Refer to [this guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/ollama_quickstart.html) from IPEX-LLM official documentation about how to install and run Ollama serve accelerated by IPEX-LLM on Intel GPU.

|

||||

|

||||

:::tip

|

||||

|

||||

If you would like to reach the Ollama service from another machine, make sure you set or export the environment variable `OLLAMA_HOST=0.0.0.0` before executing the command `ollama serve`.

|

||||

|

||||

:::
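As a rough sketch of the tip above: set the variable in the shell that starts Ollama, then use the host's address from the other machine. The LAN address `192.168.1.50` and the helper `ollama_base_url` are hypothetical, and `11434` is assumed to be the port because it is Ollama's default.

```bash
# On the machine running Ollama: bind to all interfaces, then start the server.
export OLLAMA_HOST=0.0.0.0
# ollama serve        # uncomment to start; it keeps running in the foreground

# On another machine: build the base URL to enter in Open WebUI.
# 11434 is Ollama's default port; adjust it if you changed the port.
ollama_base_url() {
  printf 'http://%s:11434\n' "$1"
}

ollama_base_url "192.168.1.50"   # hypothetical LAN address of the Ollama host
```

Binding to `0.0.0.0` exposes the service to every network the machine is attached to, so consider firewall rules if the host is not on a trusted network.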

|

||||

|

||||

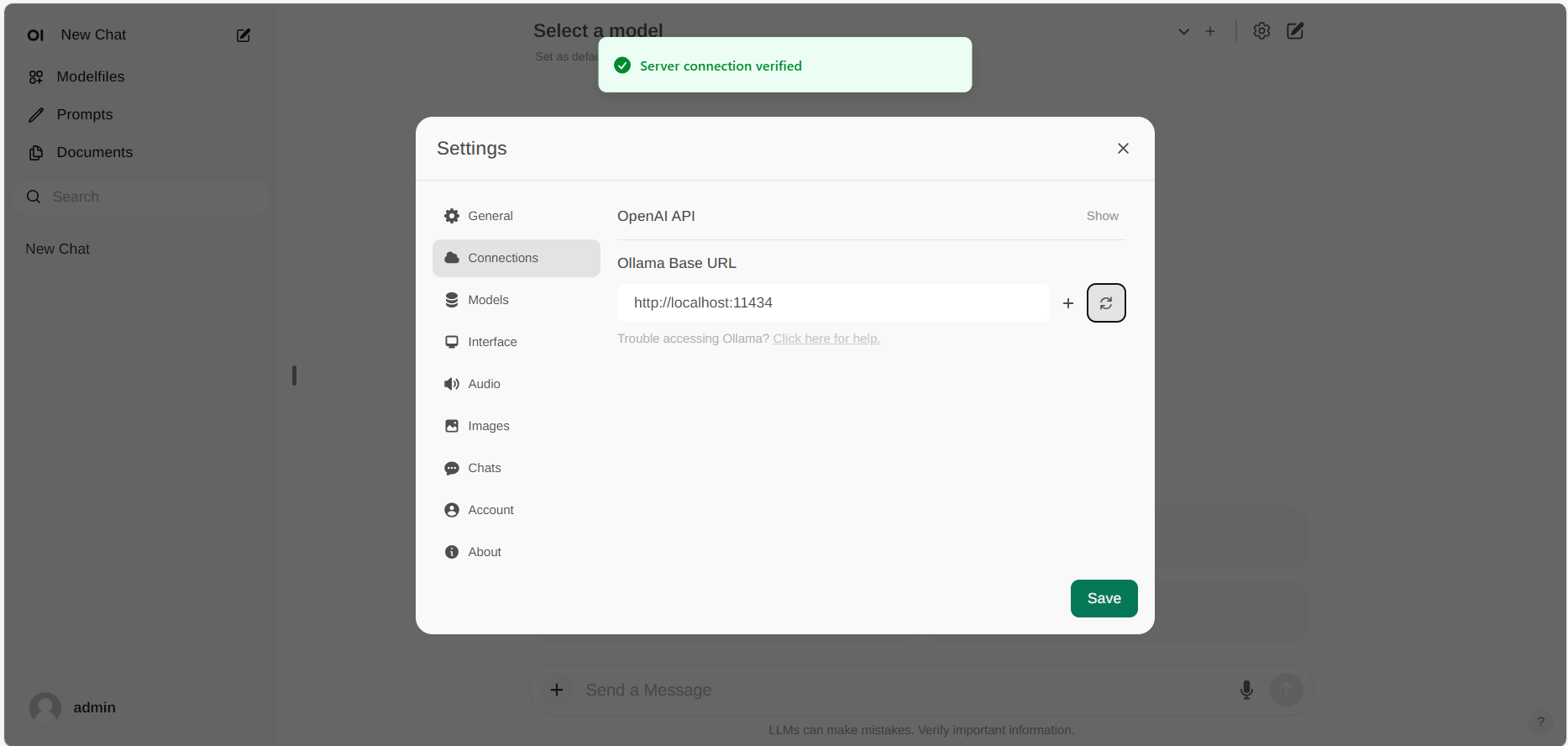

## Configure Open WebUI

|

||||

@@ -38,5 +46,7 @@ If the connection is successful, you will see a message stating `Service Connect

|

||||

|

||||

|

||||

:::tip

|

||||

|

||||

If you want to use an Ollama server hosted at a different URL, simply update the **Ollama Base URL** to the new URL and press the **Refresh** button to re-confirm the connection to Ollama.

|

||||

|

||||

:::

|

||||

|

||||

@@ -3,7 +3,9 @@ title: "💻 Iterm2 AI Integration"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the [contributing tutorial](/docs/contributing.mdx).

|

||||

|

||||

:::

|

||||

|

||||

# Use your Open WebUI models with Iterm2

|

||||

@@ -68,7 +70,7 @@ whole response will be copied into my terminal automatically.

|

||||

The script should do this: \(ai.prompt)

|

||||

```

|

||||

|

||||

You can read more about the Iterm2 prompt in the [original documentation](https://gitlab.com/gnachman/iterm2/-/wikis/AI-Prompt).

|

||||

You can read more about the Iterm2 prompt in the [Iterm2 documentation](https://gitlab.com/gnachman/iterm2/-/wikis/AI-Prompt).

|

||||

|

||||

---

|

||||

|

||||

@@ -76,7 +78,7 @@ You can read more about the Iterm2 prompt in the [original documentation](https:

|

||||

|

||||

Since the iTerm2 AI plugin does not automatically list your custom models, you'll need to add your preferred one manually.

|

||||

In your Open WebUI instance, navigate to `Admin Panel` > `Settings` > `Models`, and then click on your preferred LLM.

|

||||

Below the user-given name, you'll find the actual model name that you need to enter into iTerm2 (e.g. name: Gemma3 - model name: `/models/gemma3-27b-it-Q4_K_M.gguf`).

|

||||

Below the user-given name, you'll find the actual model name that you need to enter into iTerm2 (e.g., name: Gemma3 - model name: `/models/gemma3-27b-it-Q4_K_M.gguf`).

|

||||

|

||||

---

|

||||

|

||||

@@ -89,7 +91,7 @@ Set your preferred amount of tokens here. Typically, your inference tool will al

|

||||

### Adjust the URL

|

||||

|

||||

This is a crucial step: you need to direct the iTerm2 AI plugin requests to your Open WebUI instance.

|

||||

Either use an actual domain name if the instance is hosted somewhere (e.g. `https://example.com/api/chat/completions`) or your localhost setup (e.g. `http://localhost:8080/api/chat/completions`).

|

||||

Either use an actual domain name if the instance is hosted somewhere (e.g., `https://example.com/api/chat/completions`) or your localhost setup (e.g., `http://localhost:8080/api/chat/completions`).

|

||||

You can find more information about the URLs in the [API Endpoints guide](/docs/getting-started/api-endpoints.md).
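Before entering the URL in iTerm2, it can help to send one test request to it from a terminal. The sketch below assumes a local instance on port `8080` and an API key generated in your Open WebUI account settings; `YOUR_API_KEY`, the helper names, and the model name (taken from the example above) are placeholders to replace.

```bash
# Hypothetical values: replace with your instance URL and API key.
OPENWEBUI_URL="${OPENWEBUI_URL:-http://localhost:8080}"
OPENWEBUI_API_KEY="${OPENWEBUI_API_KEY:-YOUR_API_KEY}"

# Print the full endpoint that iTerm2 should be pointed at.
chat_endpoint() {
  printf '%s/api/chat/completions\n' "${OPENWEBUI_URL%/}"
}

# Send a one-off test request to the same endpoint.
test_chat_endpoint() {
  curl --fail --silent --show-error \
    -H "Authorization: Bearer ${OPENWEBUI_API_KEY}" \
    -H "Content-Type: application/json" \
    -d '{"model":"/models/gemma3-27b-it-Q4_K_M.gguf","messages":[{"role":"user","content":"Say hello"}]}' \
    "$(chat_endpoint)"
}
```

If `test_chat_endpoint` returns a JSON response, the same URL, key, and model name should work in the iTerm2 settings.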

|

||||

|

||||

---

|

||||

|

||||

@@ -3,7 +3,7 @@ sidebar_position: 20

|

||||

title: "🪢 Monitoring and Debugging with Langfuse"

|

||||

---

|

||||

|

||||

# Langfuse Integration with Open WebUI

|

||||

## Langfuse Integration with Open WebUI

|

||||

|

||||

[Langfuse](https://langfuse.com/) ([GitHub](https://github.com/langfuse/langfuse)) offers open source observability and evaluations for Open WebUI. By enabling the Langfuse integration, you can trace your application data with Langfuse to develop, monitor, and improve the use of Open WebUI, including:

|

||||

|

||||

@@ -16,7 +16,7 @@ title: "🪢 Monitoring and Debugging with Langfuse"

|

||||

## How to integrate Langfuse with Open WebUI

|

||||

|

||||

|

||||

_Langfuse integration steps_

|

||||

*Langfuse integration steps*

|

||||

|

||||

[Pipelines](https://github.com/open-webui/pipelines/) in Open WebUI is a UI-agnostic framework for OpenAI API plugins. It enables the injection of plugins that intercept, process, and forward user prompts to the final LLM, allowing for enhanced control and customization of prompt handling.

|

||||

|

||||

@@ -38,7 +38,7 @@ docker run -p 9099:9099 --add-host=host.docker.internal:host-gateway -v pipeline

|

||||

|

||||

### Step 3: Connecting Open WebUI with Pipelines

|

||||

|

||||

In the _Admin Settings_, create and save a new connection of type OpenAI API with the following details:

|

||||

In the *Admin Settings*, create and save a new connection of type OpenAI API with the following details:

|

||||

|

||||

- **URL:** http://host.docker.internal:9099 (this is where the previously launched Docker container is running).

|

||||

- **Password:** 0p3n-w3bu! (standard password)

|

||||

@@ -47,9 +47,9 @@ In the _Admin Settings_, create and save a new connection of type OpenAI API wit

|

||||

|

||||

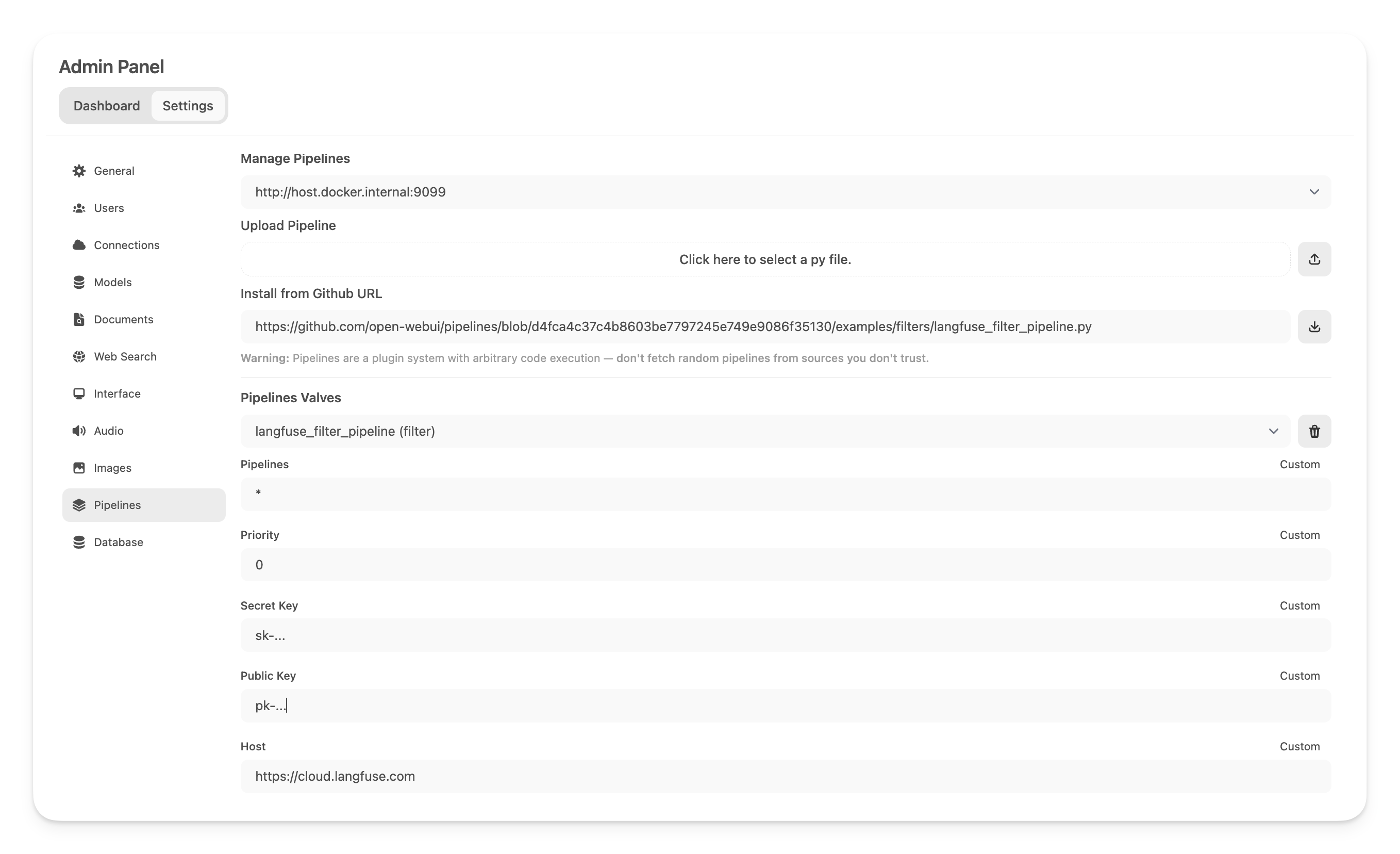

### Step 4: Adding the Langfuse Filter Pipeline

|

||||

|

||||

Next, navigate to _Admin Settings_ -> _Pipelines_ and add the Langfuse Filter Pipeline. Specify that Pipelines is listening on http://host.docker.internal:9099 (as configured earlier) and install the [Langfuse Filter Pipeline](https://github.com/open-webui/pipelines/blob/039f9c54f8e9f9bcbabde02c2c853e80d25c79e4/examples/filters/langfuse_v3_filter_pipeline.py) by using the _Install from Github URL_ option with the following URL:

|

||||

Next, navigate to *Admin Settings*->*Pipelines* and add the Langfuse Filter Pipeline. Specify that Pipelines is listening on http://host.docker.internal:9099 (as configured earlier) and install the [Langfuse Filter Pipeline](https://github.com/open-webui/pipelines/blob/039f9c54f8e9f9bcbabde02c2c853e80d25c79e4/examples/filters/langfuse_v3_filter_pipeline.py) by using the *Install from Github URL* option with the following URL:

|

||||

|

||||

```

|

||||

```txt

|

||||

https://github.com/open-webui/pipelines/blob/main/examples/filters/langfuse_v3_filter_pipeline.py

|

||||

```

|

||||

|

||||

@@ -57,7 +57,11 @@ Now, add your Langfuse API keys below. If you haven't signed up to Langfuse yet,

|

||||

|

||||

|

||||

|

||||

_**Note:** To capture usage (token counts) for OpenAI models while streaming is enabled, navigate to the model settings in Open WebUI and check the "Usage" [box](https://github.com/open-webui/open-webui/discussions/5770#discussioncomment-10778586) below _Capabilities_._

|

||||

:::note

|

||||

|

||||

***Note:** To capture usage (token counts) for OpenAI models while streaming is enabled, navigate to the model settings in Open WebUI and check the "Usage" [box](https://github.com/open-webui/open-webui/discussions/5770#discussioncomment-10778586) below *Capabilities*.*

|

||||

|

||||

:::

|

||||

|

||||

### Step 5: See your traces in Langfuse

|

||||

|

||||

|

||||

@@ -4,16 +4,16 @@ title: "🔠 LibreTranslate Integration"

|

||||

---

|

||||

|

||||

:::warning

|

||||

|

||||

This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

|

||||

|

||||

:::

|

||||

|

||||

Overview

|

||||

--------

|

||||

## Overview

|

||||

|

||||

LibreTranslate is a free and open-source machine translation API that supports a wide range of languages. It is self-hosted, offline-capable, and easy to set up, and unlike other APIs, it doesn't rely on proprietary providers such as Google or Azure to perform translations. Instead, its translation engine is powered by the open-source [Argos Translate](https://github.com/argosopentech/argos-translate) library. You can integrate LibreTranslate with Open WebUI to leverage its machine translation capabilities. This documentation provides a step-by-step guide to setting up LibreTranslate in Docker and configuring the integration within Open WebUI.

|

||||

|

||||

Setting up LibreTranslate in Docker

|

||||

-----------------------------------

|

||||

## Setting up LibreTranslate in Docker

|

||||

|

||||

To set up LibreTranslate in Docker, follow these steps:

|

||||

|

||||

@@ -38,7 +38,7 @@ services:

|

||||

stdin_open: true

|

||||

healthcheck:

|

||||

test: ['CMD-SHELL', './venv/bin/python scripts/healthcheck.py']

|

||||

|

||||

|

||||

volumes:

|

||||

libretranslate_models:

|

||||

libretranslate_api_keys:

|

||||

@@ -49,6 +49,7 @@ volumes:

|

||||

Create a new file named `stack.env` in the same directory as your `docker-compose.yml` file. Add the following configuration to the file:

|

||||

|

||||

```bash

|

||||

|

||||

# LibreTranslate

|

||||

LT_DEBUG="false"

|

||||

LT_UPDATE_MODELS="true"

|

||||

@@ -73,41 +74,38 @@ docker-compose up -d

|

||||

|

||||

This will start the LibreTranslate service in detached mode.
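Once the container reports healthy, a quick request to the translation API can confirm that it is working. This is a minimal sketch: `http://localhost:5000` is an assumed address (use the host port mapped in your `docker-compose.yml`), the helper names are hypothetical, and an instance configured to require API keys would also need an `api_key` field in the request body.

```bash
# Hypothetical address: use the host port mapped in your docker-compose.yml.
LT_URL="${LT_URL:-http://localhost:5000}"

# Build the JSON body for a translation request.
# Arguments: text, source language code, target language code.
# The text is inserted verbatim, so avoid quotes or backslashes in it.
translate_payload() {
  printf '{"q":"%s","source":"%s","target":"%s","format":"text"}' "$1" "$2" "$3"
}

# Send the request to LibreTranslate and print the raw JSON response.
translate_text() {
  curl --fail --silent --show-error \
    -H "Content-Type: application/json" \
    -d "$(translate_payload "$1" "$2" "$3")" \
    "${LT_URL%/}/translate"
}
```

For example, `translate_text "Hello world" en es` requests an English-to-Spanish translation. The first request may be slow if language models are still downloading.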

|

||||

|

||||

Configuring the Integration in Open WebUI

|

||||

-------------------------------------------

|

||||

## Configuring the Integration in Open WebUI

|

||||

|

||||

Once you have LibreTranslate up and running in Docker, you can configure the integration within Open WebUI. There are several community integrations available, including:

|

||||

|

||||

* [LibreTranslate Filter Function](https://openwebui.com/f/iamg30/libretranslate_filter)

|

||||

* [LibreTranslate Action Function](https://openwebui.com/f/jthesse/libretranslate_action)

|

||||

* [MultiLanguage LibreTranslate Action Function](https://openwebui.com/f/iamg30/multilanguage_libretranslate_action)

|

||||

* [LibreTranslate Filter Pipeline](https://github.com/open-webui/pipelines/blob/main/examples/filters/libretranslate_filter_pipeline.py)

|

||||

- [LibreTranslate Filter Function](https://openwebui.com/f/iamg30/libretranslate_filter)

|

||||

- [LibreTranslate Action Function](https://openwebui.com/f/jthesse/libretranslate_action)

|

||||

- [MultiLanguage LibreTranslate Action Function](https://openwebui.com/f/iamg30/multilanguage_libretranslate_action)

|

||||

- [LibreTranslate Filter Pipeline](https://github.com/open-webui/pipelines/blob/main/examples/filters/libretranslate_filter_pipeline.py)

|

||||

|

||||

Choose the integration that best suits your needs and follow the instructions to configure it within Open WebUI.

|

||||

|

||||

Supported languages for the LibreTranslate pipeline & function:

|

||||

Really just all the languages that can be found within LibreTranslate, but here is the list:

|

||||

```

|

||||

|

||||

```txt

|

||||